I would like to expand on one aspect of improving reporting performance

in SAP BW – exploiting the OLAP cache. The OLAP cache is standard

functionality that is setup during a technical install of SAP BW.

However, to realise the full benefits of speedy queries based on cached

data, some additional steps need to be taken post-install. I’ve noticed

these steps, and in particular the scheduled population of the OLAP

cache, has been overlooked on quite a few implementations.

In this post i’ll focus on the concept of ‘pre-filling’ the cache on a

regular basis through the Business Explorer (BEx) broadcaster. I’ll

also cover some points to consider in maintaining the OLAP cache and

performance tuning. Let’s use the functionality available in a standard

install to give your users the benefits of cached data!

Cache: A safe place for hiding or storing things.

A cache can be described as a buffer that’s employed to re-use

commonly occurring items. A cache has many applications in a computing

context: the memory cache, internet browsing history cache, disk

caching, etc. In all of these cases, caching provides an answer or data

retrieval, faster than another alternative. Faster data access can be achieved through caching data in registers (accessed in 1-5 clock cycles) vs. storing it in slower RAM (10-100 cycles).

SAP BW OLAP cache

In a SAP BW context, the OLAP cache buffers query results sets

retrieved from the database (disk) by storing them in resident memory as

highly compressed cluster data. Why bother storing query results in

memory? The answer is simple – this is a speed contest between disk

access vs. memory access, with memory being the much faster winner. On

both sides of the equation, physical and electronic, there’s compelling

logic that application performance will be improved through maintaining

data in memory, rather than retrieving it from disk. Disk access is one

of the few mechanical (as opposed to electronic) functions integral to

processing and suffers from the slowness of moving parts. On the

software side, disk access also involves a ‘system call’ that is

relatively expensive in terms of performance. The desire to improve

performance by avoiding disk access is the fundamental rationale for

database management system (DBMS) caching and other file system caching

methods. SAP BW is no different – and contains caching at the

application layer to improve OLAP reporting performance.

Users progressively fill the OLAP cache as they access queries. When

a user accesses a query result set for the first time, a database read

from disk takes place and the result set is then written to cache

memory. A cache read action takes place when the same result set (or

subset) is subsequently accessed. Hence, the result set is retrieved

from memory, instead of being retrieved from disk. An important feature

of the OLAP cache is that read/write access to the cache is available

to all users on the application server. All users get the benefits of

faster data retrieval from the cache if another process or user has

previously written the data to it.

The OLAP cache has a physical memory size and hence a storage limit. The cache persistence mode

can be setup to either swap to disk or be overwritten when it’s full.

OLAP cache data is invalidated each time a request is loaded into a

Basic InfoCube; meaning that any Basic InfoCube that is updated daily,

will have its underlying cache data wiped. This makes sense as the

cached query result set data for that InfoCube, whilst accurate as a

historical snapshot is now no longer representative of the current data

in the InfoCube. Hence the old query result set needs to be erased.

This begs the question, when should the cache be filled with the

required query result sets?

Default OLAP Cache

In the default approach post BW install, only users

progressively fill the OLAP cache as they access queries. Users that

access query data for the first time will retrieve data from disk and

not the OLAP cache – meaning they will experience a longer delay than

users subsequently accessing the same data set.

The delay in retrieving query data from the database for the first

user access can be significant for certain queries – a key problem that

needs to be solved. This first access delay is compounded for web

templates or dashboards that execute several queries for the first time

in parallel. The delay is also influenced by the complexity of the

query and your success with other performance optimisation techniques.

Furthermore the delay will occur during user runtime – a particularly

inconvenient time – as we want to shift any delays in accessing data

away from the user’s waking hours. In any case, if this query result delay is significant – then you’ve lost the user already.

Accessing the OLAP Processor

When searching for requested data, a BI query accesses performance

optimised sources in the order shown to the left. The important

take-away is that the OLAP cache is read first, in preference to all

other performance optimisation techniques, even the BI Accelerator.

However, this isn’t to say that these techniques don’t have their place.

Performance optimisation techniques, such as aggregating data,

compressing data, limiting table size, etc. are still vital to any

successful implementation. They are still valid and necessary even with

an OLAP cache. Not only will they help to soften the blow of the first

disk read as previously discussed, but it is impossible and not

efficient to cache all the permutations of query result sets. OLAP

analysis by its very nature is predicated on not being able to predict

every possible query request.

Even if you did try to make such a prediction, you’d most likely end up caching a whole bunch of result sets that:

- User’s will never read; and

- Would be invalidated on a regular basis as the Cubes are updated.

Pre-filling the cache

The idea of pre-filling or ‘warming up’ the OLAP cache is to defer

the database operations away from user run-time to another more

convenient point in time, system time. Such a time could be after the

daily load in the early hours of the morning when few (if any) query

requests are being processed.

The database is going to have to do the work at some point in time to

fill the cache – this much is clear. It could also be argued that this

work, the ‘pre-filling’ the OLAP cache, represents an additional

operation and load on the system in addition to the regular data flow

processes. In my opinion this is probably the wrong way of looking at

the problem. If we can accurately predict what the most common query

requests are going to be, then there will be no additional load on the

system. These query requests would happen in the normal course of

business. Additional load would only be generated if we go overboard

caching every possible query request permutation possible and then that

cached data is never accessed.

To prevent this we should endeavor to restrict data being loaded to

the cache to only likely requests and this will vary from Data Mart to

Data Mart. Tools are available in SAP BW through the Transaction

RSRCACHE to determine which query entries have been read from the cache.

This should be monitored on an ongoing basis to optimise your caching

strategy and remove any poorly performing cache entries from being

continually generated. See Monitoring the OLAP Cache in SAP Documentation for further details.

The most common and convenient way of pre-filling the OLAP cache is

through the BEx broadcaster, although there are several other

approaches, such as:

- Using the Reporting Agent (3.x release) to fill the cache (Transaction Code REPORTING_AGENT). Note that in 7.x a warning message appears stating that this can only be run for 3.x queries and templates, but it works fine with 7.x queries. However, it doesn’t work with 7.x web templates and will not be developed further by SAP; or

- Create a program/function module/web service that executes the relevant queries (See FM RRW3_GET_QUERY_VIEW_DATA );

- Create an Analysis Process to run the relevant queries and output the data to a dummy target such as a DSO.

Each of these methods are able to be inserted into a process chain and hence, can be regularly scheduled.

BEx Broadcasting Approaches:

Some recommended broadcasting approaches that i’ve seen work well on

implementations are listed below. Let me know what approaches have

worked well for you – I’d be interested to hear your feedback.

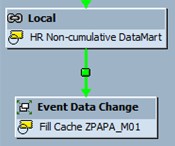

1. Schedule broadcast on data change

Firstly in the BEx Broadcaster schedule the broadcaster to run on a data change event.

Add a data change event to the end of each datamart load process chain.

This event triggers the broadcasts which pre-fill the OLAP cache. In

the data change event you specify which InfoProvider has had it’s data

changed (and hence the OLAP cache has been erased for it) and broadcasts

scheduled on the event for the InfoProvider are triggered.

2. Web Templates

Web templates can contain multiple data providers and hence multiple

queries. Instead of creating individual broadcast settings for each

query in the template, a single global setting can be created for the

template. When scheduled and broadcasted, this web template setting

will run, and hence cache, all of the underlying queries in the template

as they would appear in the dashboard.

Unfortunately for web templates, there isn’t a broadcast distribution

type to fill the OLAP cache. Instead if you’re objective is to only

fill the OLAP cache, another setting such as Broadcast to the Portal

will need to be used. I’d recommend the following settings:

| Distribution Type: | Broadcast to the Portal | ||||

| Output Format: | Online Link to Current Data | ||||

| Authorization User: | ADMIN | ||||

| Export Document to My Portfolio: | Check | ||||

| Export User: | ADMIN |

Using these settings will result in generated online links to the web

template (and not larger files) being posted for the Admin User in the

Business Intelligence Role in My Portfolio/BEx Portfolio/Personal BEx

Documents.

The online links posted in this folder will overwrite each other and

hence preventing a large amount of documents being stored in this

directory over time.

3. Queries

Queries can be individually broadcasted. Unlike with broadcasting a

web template, more settings are available, such as broadcasting by

multiple selection screen variants and by filter navigation on the query

result set, i.e. by a characteristic.

Cache maintenance

Now that you’ve broadcast queries/web templates to the OLAP cache,

you’re going to need to maintain it. To do so, a little more

information is needed about the technical architecture of the SAP BW

OLAP cache. Technically, the OLAP cache consists of two caches, the

local cache and the global cache. These two caches can be setup with

different parameters, such as size. The local cache is accessed by a

single user within a session on an application server. Local cache data

is retained in the roll area as long as it is required by the OLAP

processor for that user. Global cache data is shared by all users

across all application servers. Global cache data is retained for as

long as it is required and will either be deleted when it is no longer

needed, e.g. the underlying data has changed and the cache is

invalidated, or depending on the persistence mode will be swapped to

disk when the cache size is exceeded.

The cache size parameters indicate the maximum size that the local

and global caches are permitted to grow to. The global cache size

should be larger than the local cache size, as the global cache is

accessed across multiple users. The local and global cache size values

should be generally extended from their default settings when you

install SAP BW. This will take advantage of memory available and ensure

that the stored cache entries do not exceed the cache capacity. The

size parameters should be reviewed periodically depending on cache

usage, hit ratio and overflow. The cache size must be appropriate to

manage the frequency of query calls and the number of users. Some

indications that your cache size should be extended are:

- The number of cache entries has filled the capacity of the cache at least once.

- Average number of cache entries corresponds to at least 90% of the capacity or has reached this capacity around 30% of the time.

- Ratio of hits to gets, is lower than 90%.

You can configure the cache parameters using Transaction RSCUSTV14.

Note that the size of the global cache is determined by the minimum

value of the Global Size MB parameter and the actual memory available in

the shared memory buffer (profile parameter rdsb/esm/buffersize_kb). You should therefore use Transaction ST02 to check whether the size of

the export/import buffer is appropriate – as the default setting of

4,096 KB is often too small. SAP recommends the following settings:

- rsdb/esm/buffersize_kb=200000

- rsdb/esm/max_objects=10000

The permitted cache size needs to be realistic though. If you’re

talking in the multiples of gigabytes, you may wish to review why you

need so much data in the cache in the first place. A significant OLAP

processing load would need to take place to generate that much cache

data.

Wrap up

We’ve covered a fair bit of ground on the SAP BW OLAP cache in this

post. Significant performance benefits can be achieved through the

system (and not the user) pre-filling the cache on a

regular basis as the underlying data changes. The benefits are available

to all users as the OLAP analysis results are stored in a central

repository, the OLAP Cache. Furthermore the initial hit on the database

(and subsequent delay) when the query/web template is run the first

time after the data has changed, is taken away from the user and

performed by the system at a more convenient time. A benefit that

they’ll sure be appreciative of first thing in the morning when they

arrive at their desk.

Hope you like this post and useful.

This is really interesting, You are a very skilled blogger. I have joined your feed and look forward to seeking more of your wonderful post. Also, I have shared your site in my social networks!

ReplyDeleteSCCM 2012 Online Training By Realtime Trainer In India

My olap cache is not updated some times when the underlying data is updated which results in incorrect data to users occasionally. This gets corrected overnight but till then for a certain query the data is incorrect. what can i do to fix it

ReplyDeleteMy olap cache is not updated some times when the underlying data is updated which results in incorrect data to users occasionally. This gets corrected overnight but till then for a certain query the data is incorrect. what can i do to fix it

ReplyDelete